Blog@Edge

I hate waiting.

I hate waiting in line.

I hate waiting in traffic.

And I hate waiting for simple websites to load.

When I first looked into creating my own blog, I channeled all that frustration and angst about “waiting” into making sure users would never have to wait for my blog to load, no matter where in the world they read it from. I made it my mission to kill off as much latency as I could. Of course, I needed to leverage a Content Delivery Network (CDN).

In 2020, Google’s CDN is widely considered the fastest in the industry. Unfortunately, I compromised my values from the start. I traded the superior performance offered by Google for my familiarity with AWS and went with Amazon’s CDN product, CloudFront. Keep in mind that Google was only faster by a few milliseconds according to cdnperf.com on 5/25/2020.

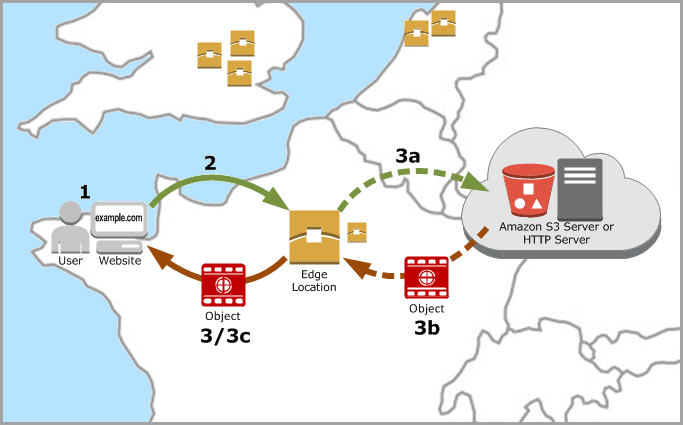

Moreover, as of May 2020, the AWS footprint is impressive: it maintains 24 regions (i.e., the major data centers) and also offers 216 “Points of Presence” (PoPs, i.e., CDN delivery servers), made up of 205 Edge Locations and 11 Regional Edge Caches. When a user requests a resource, they are first directed to a PoP, which checks whether that resource is cached at that location. If it isn’t, the PoP makes a “roundtrip” request to the “origin server” specified in CloudFront to retrieve that resource. As the resource passes back through the PoP, it is cached at that location for any subsequent requests made by users served by that PoP.
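To make the cache-hit versus roundtrip behavior concrete, here is a toy sketch of a single PoP. It is not how CloudFront is implemented; the `PointOfPresence` class and the `origin` function are made-up names for illustration, and the cache is just an in-memory dictionary with no expiry.

```python
from typing import Callable, Dict


class PointOfPresence:
    """Toy model of one edge location with an in-memory cache (hypothetical)."""

    def __init__(self, fetch_from_origin: Callable[[str], str]) -> None:
        self._fetch_from_origin = fetch_from_origin
        self._cache: Dict[str, str] = {}
        self.origin_roundtrips = 0  # counts how often we had to leave the edge

    def get(self, path: str) -> str:
        if path in self._cache:
            # Cache hit: served straight from the PoP, no roundtrip.
            return self._cache[path]
        # Cache miss: make the "roundtrip" to the origin, then cache the result
        # so later requests through this PoP are served locally.
        self.origin_roundtrips += 1
        body = self._fetch_from_origin(path)
        self._cache[path] = body
        return body


def origin(path: str) -> str:
    """Stand-in for the origin server living in a Region."""
    return f"<h1>content for {path}</h1>"


pop = PointOfPresence(origin)
pop.get("/index.html")  # first request: miss, goes to the origin
pop.get("/index.html")  # second request: hit, stays at the edge
print(pop.origin_roundtrips)
```

Only the first request pays for the trip to the Region; that first-request penalty is the part I wanted to get rid of.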

Most would say that just hosting a blog on a static server and delivering it via a CDN would solve most of your latency problems. Not me, though. My microblog had to be blazingly fast!

I focused on a single problem: getting rid of those pesky milliseconds it takes to make “roundtrips” to a Region.

No “origin server” for me - my data would always live at the Edge regardless of the user. In this microblog, I would break every sensible convention to prove a moot point (yes, I realize it’s all very silly now, considering I have yet to find an effective way to profile the improved performance of my approach).

One important detail to understand is that CloudFront can run Lambda functions. Lambda@Edge is not a new feature, but it has not been exploited to the same extent as traditional Lambda functions. In particular, there are restrictions as of May 2020 that still make these functions a little difficult to work with. Two of the four event types (viewer request and viewer response) are more limited than their counterparts (origin request and origin response).

Viewer Request and Viewer Response event quotas:

- Function memory size: 128 MB

- Function timeout: 5 seconds

- Size of a response (including headers and body): 40 KB

- Maximum compressed size of a Lambda function and any included libraries: 1 MB

- Read more about limits
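The response size limit is the one most likely to bite a blog page, so a rough pre-deployment check can be handy. The sketch below is only an approximation: the helper names are hypothetical, I am assuming the limit means 40 × 1024 bytes, and I am assuming the size is roughly the UTF-8 body plus header names and values. How CloudFront counts the bytes exactly may differ.

```python
from typing import Any, Dict

# Assumption: "40 KB" is treated as 40 * 1024 bytes.
MAX_VIEWER_RESPONSE_BYTES = 40 * 1024


def response_size_bytes(response: Dict[str, Any]) -> int:
    """Approximate the size of a generated response (body plus headers)."""
    size = len(response.get("body", "").encode("utf-8"))
    for entries in response.get("headers", {}).values():
        for entry in entries:
            size += len(entry.get("key", "").encode("utf-8"))
            size += len(entry["value"].encode("utf-8"))
    return size


def fits_viewer_quota(response: Dict[str, Any]) -> bool:
    """True if the approximate size is within the viewer-event limit."""
    return response_size_bytes(response) <= MAX_VIEWER_RESPONSE_BYTES


small = {
    "status": "200",
    "headers": {"content-type": [{"key": "Content-Type", "value": "text/html"}]},
    "body": "hello",
}
print(response_size_bytes(small), fits_viewer_quota(small))
```

A check like this could run in a build step so an oversized post fails before it is deployed rather than at request time.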

With all that said, I created Blog@Edge - blog pages that are served from a single Lambda@Edge function.
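For a feel of what "pages served from a single function" can look like, here is a minimal sketch of a viewer-request handler that generates the response itself instead of forwarding the request to an origin. It is not the actual Blog@Edge code: the `PAGES` dictionary, the `_html_response` helper, and the page contents are invented for illustration. The event and response shapes follow the Lambda@Edge format for CloudFront events.

```python
from typing import Any, Dict

# Hypothetical page store: the posts are bundled into the function itself,
# so there is no origin server to call.
PAGES: Dict[str, str] = {
    "/": "<h1>Blog@Edge</h1><p>Hello from the edge.</p>",
    "/about": "<h1>About</h1><p>I hate waiting.</p>",
}


def _html_response(status: str, description: str, body: str) -> Dict[str, Any]:
    """Build a generated response in the shape CloudFront expects."""
    return {
        "status": status,
        "statusDescription": description,
        "headers": {
            "content-type": [
                {"key": "Content-Type", "value": "text/html; charset=utf-8"}
            ]
        },
        "body": body,
    }


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Viewer-request handler: answer from the edge without forwarding."""
    request = event["Records"][0]["cf"]["request"]
    page = PAGES.get(request["uri"])
    if page is None:
        return _html_response("404", "Not Found", "<h1>Not found</h1>")
    return _html_response("200", "OK", page)
```

Because the handler returns a response object rather than the request, CloudFront replies to the viewer directly and never contacts an origin, which is the whole trick.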

There are obviously some major cons to this entire approach (slow deployments, no caching of pages). This is just to demonstrate a concept.

Check out the blog here: http://d18ysengjg5rtu.cloudfront.net/